This repo contains a character-level language model (LM) with one LSTM cell, heavily inspired by Andrej Karpathy's excellent blog post 'The Unreasonable Effectiveness of Recurrent Neural Networks'. If you're interested in this kind of thing, I highly encourage you to read it.

The model is trained with the PyTorch library.

There are a couple of different datasets to train the LM on: English news, German news, and Python source code.

They are stored in the data folder. (I didn't upload the data here, but the sources are referenced at the end of this readme, except for the Python dataset, whose source I couldn't find.)

Feel free to clone the repo and use your own dataset to generate some text :-)

At the beginning of the notebook, there are a couple of cells which load the different datasets. To use your own data, preprocess it so that it consists of one large string and then call the get_train_test_split helper method.
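
As a rough sketch of that preprocessing step: the snippet below joins a few documents into one large string and splits it into a training and a test part. `split_text` and its `train_fraction` argument are hypothetical stand-ins; the notebook's own get_train_test_split helper may take different arguments and split differently.

```python
from typing import Tuple


def split_text(text: str, train_fraction: float = 0.9) -> Tuple[str, str]:
    """Split one large string into a training part and a test part.

    Hypothetical stand-in for the notebook's get_train_test_split helper;
    the real helper may behave differently.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be between 0 and 1")
    cut = int(len(text) * train_fraction)
    return text[:cut], text[cut:]


# Join several documents into one large string before splitting.
documents = ["First article.", "Second article.", "Third article."]
corpus = "\n".join(documents)
train_text, test_text = split_text(corpus, train_fraction=0.8)
```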

In the first couple of cells in the notebook, there are some hyperparameters to configure, like batch size or sequence length.

The training procedure of the model is as follows:

The input feature is a one-hot encoded vector, where each position in the vector represents one character. The size of this vector depends on the dataset used to train the model. For example, German text contains characters like ä and ö that don't occur in English, so it wouldn't make sense to include them in the one-hot encoding for the English dataset.
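
A minimal sketch of such an encoding, assuming the vocabulary is simply the set of characters that occur in the data. The variable names and the toy string are made up for illustration and are not taken from the notebook.

```python
import torch
import torch.nn.functional as F

text = "hello world"  # toy stand-in for the training data

# The vocabulary is built from the characters that actually occur in the data,
# so its size differs between datasets.
chars = sorted(set(text))
char_to_idx = {ch: i for i, ch in enumerate(chars)}

# Map every character to its index, then to a one-hot row.
indices = torch.tensor([char_to_idx[ch] for ch in text])
one_hot = F.one_hot(indices, num_classes=len(chars)).float()

print(one_hot.shape)  # (number of characters, vocabulary size)
```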

Then, the training data is split into sequences of the length specified at the beginning of the notebook. The model is trained to predict the next character based on the previous characters. Hence, the ground truth is the input sequence shifted by one character. There's a very nice illustration of this kind of training in Karpathy's blog post.
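
The shift can be sketched in plain Python as follows. `make_sequences` is a hypothetical helper, and cutting the text into non-overlapping windows is an assumption; the notebook may slice the data differently.

```python
def make_sequences(text, seq_len):
    """Cut text into (input, target) pairs; the target is the input shifted by one character."""
    pairs = []
    # Stop early enough that every input has a full-length target.
    for start in range(0, len(text) - seq_len, seq_len):
        inputs = text[start : start + seq_len]
        targets = text[start + 1 : start + seq_len + 1]
        pairs.append((inputs, targets))
    return pairs


pairs = make_sequences("Yesterday the", seq_len=4)
for inputs, targets in pairs:
    print(repr(inputs), "->", repr(targets))
```

At every position, the target character is the one that follows the corresponding input character in the original text.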

The loss function used is the cross-entropy loss.
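
A short sketch of how this loss could be applied to a batch of sequences, assuming the model outputs one score per vocabulary entry at every position. The tensor sizes are toy values and the random tensors only stand in for real model output and targets. Note that `nn.CrossEntropyLoss` takes class indices as targets, not one-hot vectors.

```python
import torch
import torch.nn as nn

batch_size, seq_len, vocab_size = 4, 30, 50  # toy sizes

# Stand-ins for the model output (unnormalised scores) and the shifted targets.
logits = torch.randn(batch_size, seq_len, vocab_size)
targets = torch.randint(0, vocab_size, (batch_size, seq_len))

# nn.CrossEntropyLoss expects (N, C) scores and (N,) class indices,
# so the batch and time dimensions are flattened together.
criterion = nn.CrossEntropyLoss()
loss = criterion(logits.reshape(-1, vocab_size), targets.reshape(-1))
```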

The dimensions of the input data for the LSTM cell can be found here: https://pytorch.org/docs/master/generated/torch.nn.LSTM.html
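
For quick reference, here is a small shape check with toy sizes. Whether the notebook uses `batch_first=True` is an assumption; without it, `nn.LSTM` expects the sequence dimension first.

```python
import torch
import torch.nn as nn

batch_size, seq_len, vocab_size, hidden_size = 8, 30, 50, 64  # toy sizes

# batch_first=True makes the input (batch, seq_len, input_size);
# the default layout is (seq_len, batch, input_size).
lstm = nn.LSTM(input_size=vocab_size, hidden_size=hidden_size, batch_first=True)

x = torch.zeros(batch_size, seq_len, vocab_size)  # one-hot vectors would go here
output, (h_n, c_n) = lstm(x)

print(output.shape)  # (batch, seq_len, hidden_size)
print(h_n.shape)     # (num_layers, batch, hidden_size)
```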

In this section I'm going to briefly show some results of what the model is capable of.

Here are some examples of text which the model generated after different numbers of training epochs. The input to the model was the word 'Yesterday'. The rest of the output was generated by the model character by character, each time based on its previous output: after the model predicted the character following 'Yesterday', that character was appended to the string and the new, longer string was fed into the network again.
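
The generation loop described above could look roughly like this. `CharLSTM` and `generate` are hypothetical names, the architecture (one LSTM layer plus a linear layer) is an assumption, and the model here is untrained, so its output is gibberish.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class CharLSTM(nn.Module):
    """Hypothetical model: one LSTM layer followed by a linear layer over the vocabulary."""

    def __init__(self, vocab_size, hidden_size):
        super().__init__()
        self.lstm = nn.LSTM(vocab_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, vocab_size)

    def forward(self, x):
        out, _ = self.lstm(x)
        return self.fc(out)  # (batch, seq_len, vocab_size)


def generate(model, seed, char_to_idx, idx_to_char, n_chars):
    """Greedy generation: append the most likely next character, then feed the longer string back in."""
    model.eval()
    text = seed
    with torch.no_grad():
        for _ in range(n_chars):
            idx = torch.tensor([[char_to_idx[ch] for ch in text]])
            x = F.one_hot(idx, num_classes=len(char_to_idx)).float()
            logits = model(x)
            # Only the scores at the last position predict the next character.
            next_idx = logits[0, -1].argmax().item()
            text += idx_to_char[next_idx]
    return text


chars = sorted(set("Yesterday the country"))
char_to_idx = {ch: i for i, ch in enumerate(chars)}
idx_to_char = {i: ch for ch, i in char_to_idx.items()}

model = CharLSTM(vocab_size=len(chars), hidden_size=32)  # untrained
sample = generate(model, "Yesterday", char_to_idx, idx_to_char, n_chars=20)
```

Because the most likely character is always chosen, the same context always leads to the same continuation, which is one reason greedy generation easily falls into loops.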

For the samples below, I used the following (hyper)parameters:

- sequence length of 30 characters

- batch size of 128

- the training data consisted of 2147136 characters (so only a part of the whole English news dataset was used)

- dimension of the hidden state: 768

- optimizer: Adam (with a learning rate of 0.001)

Yesterday the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and the s and ...

Yesterday of the contral to the contrace that the contral to the contrace that the contral to the contrace that the contral to the contrace that the contral to the contrace that the contral to the contrace that the contral to the contrace that the contral to the contrace ...

Yesterdayon and the country was the state of the country with the country with the country with the country with the country with the country with the country with the country with the country with the country with the country with the country with the country with the ...

Yesterdayon Committee on Friday, the prospects of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state of the same time of the state

Yesterday, who was the state of the same time, the country was the first time the country has been struggling to repeal the new law. “The mood replace its business of the state of the state of the same way and the first time the same concert from the streets will be insurance for the strategic in the same protest your confirmation has been surprised by the state of the state of the same way and the first time the same concert from the streets will be insurance for the strategic in the same protest your c

Yesterday. An of the country as a new lape of the prosecutor and the first time that a commission ecrool in the state of the state of Mr. Trump’s cabinet picks of the state of Mr. Trump’s cabinet picks of the state of Mr. Trump’s cabinet picks of the state of Mr. Trump’s cabinet picks of the state of Mr. Trump’s cabinet picks of the state of Mr. Trump’s cabinet picks of the state of Mr. Trump’s cabinet picks of the state of Mr. Trump’s cabinet picks of the state of Mr. Trump’s cabinet picks of the state

Yesterday, a production of the Clintons deserver the study of the process. ” Mr. Schulbac said on Thursday night before the election and at the state has the opportunity to the law, as he has shot even there are leaving outside to become a big staff, deplaying officers often the story found in fall on an underway although its state of the state and was still electricity. ” The results of the Senate committee, which would exploit them to the company’s office, and the special prosecutor and admiral even mo

It's very interesting to see that the model learned to write (mostly) correct words starting from basically nothing. It only had some news articles to learn from. The generated text doesn't really make sense, but it more or less sounds like a news article.

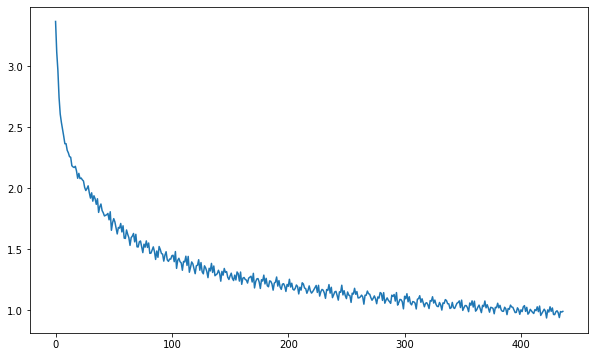

In this plot we can see the loss during training. The x-axis has no meaningful unit; the plot simply shows the loss over time.

This is what the model produced after 40 epochs of training on Python source code, given the character # as input. The code obviously wouldn't run, but again, it does look like Python code.

# The flag with encluding instance of the above copyright notice and then interface code -->

if self.class_gc_exact != None:

return re.compile(r"<h(1, / %s, %s" % (gccess):

return s

def replaceHTML(file):

A key difference between the news articles and the source code is that the source code contains approximately 3 times more unique characters than the news articles. So the model would probably need more data to produce better code. I didn't train on the whole Python dataset (~1GB) because of the RAM limitations of my computer. One could solve that issue by using a generator to load batches.
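
A minimal sketch of such a generator, assuming the dataset is a plain text file. `iter_batches` is a hypothetical helper that is not part of this repo; it reads only one window at a time instead of loading the whole file into memory. The demo uses an in-memory `io.StringIO`, but an open text file handle works the same way.

```python
import io


def iter_batches(handle, seq_len, batch_size):
    """Yield batches of (input, target) string pairs while reading the file piece by piece."""
    batch = []
    window = handle.read(seq_len + 1)
    while len(window) == seq_len + 1:
        # The target is the input shifted by one character.
        batch.append((window[:-1], window[1:]))
        if len(batch) == batch_size:
            yield batch
            batch = []
        # The last character of this window is the first input of the next one.
        window = window[-1] + handle.read(seq_len)
    if batch:
        yield batch  # final, possibly smaller batch


handle = io.StringIO("abcdefghijk")
batches = list(iter_batches(handle, seq_len=3, batch_size=2))
```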

- Generation: Replace greedy sampling with beam search to reduce repeated sampling of the same text (see the blog article about different sampling techniques by Hugging Face)

- Use perplexity to evaluate the model

- Use more than one LSTM cell (stack them on top of each other)

- Use regularization techniques like dropout

- Use a generator to load batches, so that the whole Python dataset can be used.
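
For the perplexity item in the list above, a small sketch: perplexity is the exponential of the mean per-character cross-entropy, assuming the loss is measured in nats (which is what PyTorch's cross-entropy loss returns). The `perplexity` function is a hypothetical helper, not something that exists in the notebook yet.

```python
import math


def perplexity(mean_cross_entropy):
    """Perplexity is the exponential of the mean per-character cross-entropy (in nats)."""
    return math.exp(mean_cross_entropy)


# A model that guesses uniformly over 50 characters has a loss of ln(50),
# which corresponds to a perplexity of about 50.
uniform_loss = math.log(50)
uniform_perplexity = perplexity(uniform_loss)
```

For the stacking and dropout items, `torch.nn.LSTM` already accepts `num_layers` and `dropout` arguments, so both could be tried without writing a custom layer.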

- German news dataset: https://tblock.github.io/10kGNAD/

- English news dataset: https://www.kaggle.com/snapcrack/all-the-news

- Python source code dataset: Unfortunately, I couldn't find the source for the Python dataset anymore. I downloaded it a couple of years ago... I will update this as soon as I find it.