On-call Health detects potential signs of overwork in incident responders that could lead to burnout. It integrates with Rootly, PagerDuty, GitHub, and Slack to compute a per-responder risk score.

Two ways to get started:

- Use our hosted version at www.oncallhealth.ai (it contains mock data so you can easily test it out)
- Host it locally

To host it locally, use our Docker Compose file:

```bash
# Clone the repo
git clone https://github.com/Rootly-AI-Labs/on-call-health
cd on-call-health

# Launch with Docker Compose
docker compose up -d

# Create a copy of the .env file
cp backend/.env.example backend/.env
```

📝 Instructions to get tokens for Google Auth

- Enable the Google People API
- Get your tokens:
  - Create a new project
  - In the **Overview** tab, click the **Get started** button and fill out the required information
  - Go to the **Clients** tab and click the **+ Create client** button
  - Set **Application type** to **Web application**
  - Set **Authorized redirect URIs** to `http://localhost:8000/auth/google/callback`
  - Keep the pop-up that contains your **Client ID** and **Client secret** open
- Fill out the variables `GOOGLE_CLIENT_ID` and `GOOGLE_CLIENT_SECRET` in your `backend/.env` file
- Restart the backend:

```bash
docker compose restart backend
```
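Before restarting, a quick check can catch a variable that was left empty. The sketch below is a hypothetical helper, not part of the repository: it assumes a simple `KEY=value` format (with optional quotes and `#` comments) and only checks the two Google variables named above.

```python
"""Hypothetical helper: check that backend/.env defines the Google OAuth variables.

Assumes a simple KEY=value .env format; this is not the project's own loader.
"""
from pathlib import Path

REQUIRED_KEYS = ("GOOGLE_CLIENT_ID", "GOOGLE_CLIENT_SECRET")


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=value lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict[str, str], required: tuple[str, ...] = REQUIRED_KEYS) -> list[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]


def check_env_file(path: str = "backend/.env") -> list[str]:
    """Read an .env file and return the required keys that are missing or empty."""
    return missing_keys(parse_env(Path(path).read_text()))
```

Run from the repository root, `check_env_file()` returning an empty list means both variables have values.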

📝 Instructions to get tokens for GitHub Auth

- Visit https://github.com/settings/developers
- Click OAuth Apps → New OAuth App and fill out the form:
  - Application name: On-Call Health
  - Homepage URL: http://localhost:3000
  - Authorization callback URL: http://localhost:8000/auth/github/callback
- Create the app:
  - Click Register application
  - You'll see your Client ID
  - Click Generate a new client secret to get your Client Secret
- Add your Client ID and Client Secret to `backend/.env`
- Restart the backend (`docker compose restart backend`)

You can also set it up manually, but this method isn't actively supported. Prerequisites:

- Python 3.11+
- Node.js 18+ (for the frontend)
- A Rootly or PagerDuty API token

Set up and run the backend:

```bash
cd backend
python -m venv venv
source venv/bin/activate  # on Windows: venv\Scripts\activate
pip install -r requirements.txt

# Copy and configure environment
cp .env.example .env
# Edit .env with your configuration

# Run the server
python -m app.main
```

The API will be available at http://localhost:8000.

In a second terminal, from the repository root, set up and run the frontend:

```bash
cd frontend
npm install
npm run dev
```

The frontend will be available at http://localhost:3000.

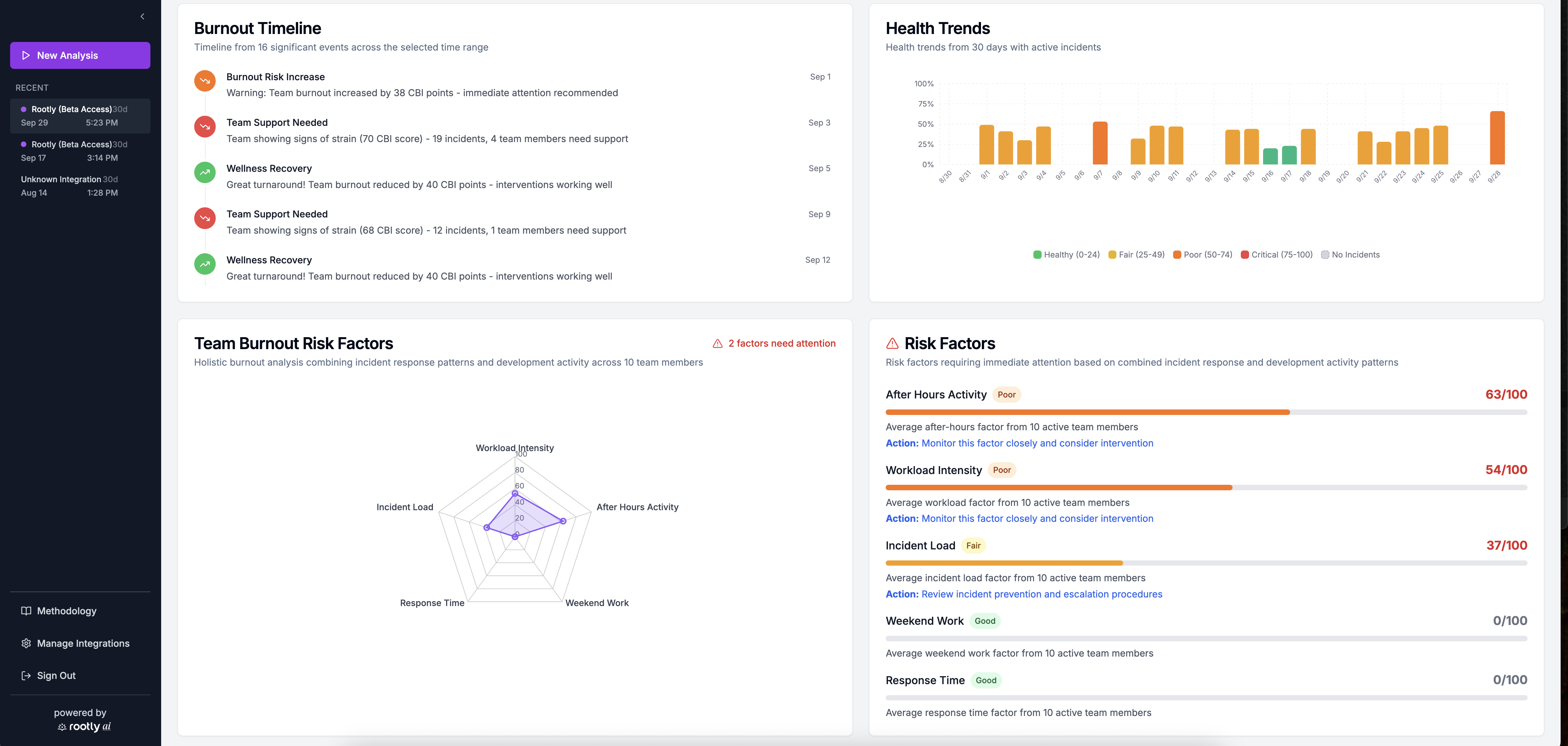

Key features:

- Multi-layer signals: Individual and team-level insights
- Interactive dashboard: Visual and AI-powered risk analysis for incident responders at the team and individual level
- Tailored to your organization: Customize tool integrations and signal weights

On-call Health takes inspiration from the Copenhagen Burnout Inventory (CBI), a scientifically validated approach to measuring burnout risk in professional settings. It isn’t a medical tool and doesn’t provide a diagnosis; it is designed to help identify patterns and trends that may suggest overwork.
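For context, the CBI questionnaire is commonly scored by mapping each answer to 100, 75, 50, 25, or 0 points and averaging the items of a dimension. The sketch below shows that questionnaire scoring only; it is not On-call Health's code, it handles only the frequency-style answer wording ("never" standing for "never/almost never"), and the `reversed_items` parameter is a hypothetical convenience for positively worded items.

```python
"""Standard CBI-style scale scoring, shown for context only.

This is how the questionnaire itself is commonly scored, not On-call Health's code.
"""
from statistics import mean

# Frequency-style answers mapped to CBI item points (100 = most burnout).
RESPONSE_POINTS = {"always": 100, "often": 75, "sometimes": 50, "seldom": 25, "never": 0}


def dimension_score(answers: list[str], reversed_items: frozenset[int] = frozenset()) -> float:
    """Return the mean 0-100 score of one dimension's answers.

    `reversed_items` lists indices of positively worded items, scored as 100 - points.
    """
    if not answers:
        raise ValueError("no answers for this dimension")
    points = []
    for index, answer in enumerate(answers):
        value = RESPONSE_POINTS[answer.strip().lower()]
        points.append(100 - value if index in reversed_items else value)
    return float(mean(points))
```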

Our implementation uses two of the CBI's three dimensions:

- Personal Burnout
  - Physical and psychological fatigue from workload
  - Work-life boundary violations (after-hours/weekend work)
  - Temporal stress patterns and recovery time deficits
- Work-Related Burnout
  - Fatigue specifically tied to work processes
  - Response time, pressure, and incident load
  - Team collaboration, stress, and communication quality
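The work-life boundary signal (after-hours/weekend work) can be approximated from activity timestamps, such as commit times or message times. A minimal sketch, assuming hypothetical working hours of 09:00 to 18:00, Monday to Friday, in the responder's time zone; the tool's real thresholds and time-zone handling may differ.

```python
"""Hypothetical after-hours/weekend activity ratio from event timestamps.

Working hours (09:00-18:00, Monday-Friday) are assumptions for illustration.
"""
from datetime import datetime, time, timezone, tzinfo

WORK_START = time(9, 0)
WORK_END = time(18, 0)


def is_after_hours(ts: datetime, tz: tzinfo = timezone.utc) -> bool:
    """True if `ts` falls on a weekend or outside working hours in `tz`."""
    if ts.tzinfo is None:
        raise ValueError("timestamps must be timezone-aware")
    local = ts.astimezone(tz)
    if local.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return True
    return not (WORK_START <= local.time() < WORK_END)


def after_hours_ratio(timestamps: list[datetime], tz: tzinfo = timezone.utc) -> float:
    """Fraction of events (commits, messages, pages) outside working hours."""
    if not timestamps:
        return 0.0
    flagged = sum(is_after_hours(ts, tz) for ts in timestamps)
    return flagged / len(timestamps)
```

For a responder outside UTC, pass a zone such as `zoneinfo.ZoneInfo("Europe/Paris")` as `tz`.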

Security:

- OAuth with Google/GitHub (no password storage)
- JWT tokens for session management
- Encrypted API token storage
- HTTPS enforcement
- Input validation and sanitization

Integrations:

- Rootly: For incident management and on-call data
- PagerDuty: For incident management and on-call data
- GitHub: For commit activity
- Slack: For communication patterns and to collect self-reported data

If you are interested in integrating with On-call Health, get in touch!

On-call Health also offers an API that can expose its findings.

On-call Health is built with ❤️ by the Rootly AI Labs for engineering teams everywhere. The Rootly AI Labs is a fellow-led community designed to redefine reliability engineering. We develop innovative prototypes, create open-source tools, and produce research that's shared to advance the standards of operational excellence. We want to thank Anthropic, Google Cloud, and Google DeepMind for their support.

This project is licensed under the Apache License 2.0.